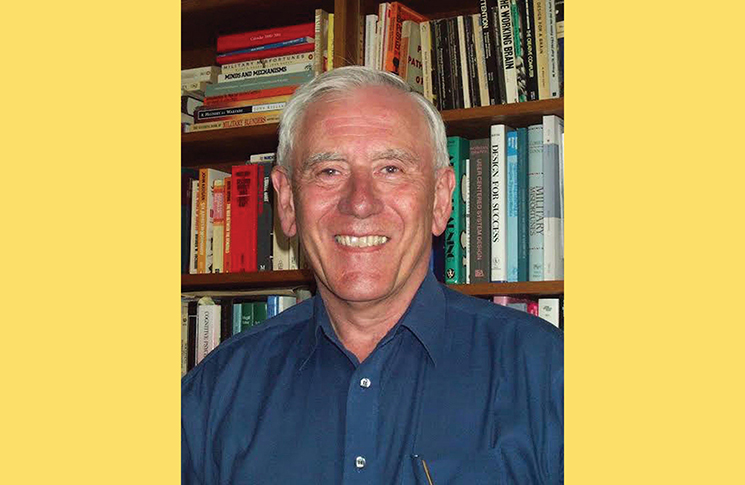

Professor James Reason CBE, 1938–2025

Since the early 1990s, airline travel has become safer by an order of magnitude, when the number of passengers flown is compared with the number of fatal accidents. Many systems and technologies are responsible for this very high (although tragically, not perfect) level of safety assurance, but prominent among the contributors is the work of an English psychologist and occasional private pilot. His combination of academic rigour, verbal flair and self-analytic humility created a new field of study with life-saving real-world benefits.

He was Professor James Reason CBE, who died on 4 February 2025, aged 86. He changed aviation safety with a new way of thinking about accidents that revealed ways to prevent them recurring. His research was originally published as ‘The Contribution of Latent Human Failures to the Breakdown of Complex Systems’. Unsurprisingly, it became abbreviated as the Swiss cheese model. It was a simple, elegantly stated and understandable idea, expressible in a simple diagram. Defences against accidents had holes in them, like Swiss cheese, and sometimes these holes lined up to allow what Reason called ‘a trajectory of accident opportunity’ to pass through.

The feline contribution

Reason’s career-defining insight might never have happened if not for a domestic cat. He told the story in his wryly titled autobiography, A life in error.

One afternoon in the early 1970s, I was boiling a kettle of tea. The teapot (those were the days when tea leaves went into the pot rather than teabags) was waiting open-topped on the kitchen surface. At that moment, the cat – a very noisy Burmese – turned up at the nearby kitchen door, howling to be fed. I have to confess I was slightly nervous of this cat and his needs tended to get priority. I opened a tin of cat food, dug in a spoon and dolloped a large spoonful of cat food into the teapot.

After swearing at the cat, Reason pondered the nature of his ‘behavioural spoonerism’. He realised it was neither intentional nor random. From this evolved a theory of accident causation that examined the interaction between human actions, preconditions and defences, or barriers. In aviation these barriers include maintenance systems, standard operating procedures and checklists, pilot licensing, ATC and airspace design.

Australian safety pioneer Rob Lee of the Bureau of Air Safety Investigation coined the term Swiss cheese to describe how the barriers in Reason’s theory contained metaphorical holes that opened, closed and moved.

‘I am indebted to him, because I love the name,’ Reason said. He memorably demonstrated the concept in a safety promotion video by visiting an English delicatessen, ordering several slices of Swiss Emmental cheese and holding them to the camera while describing his theory.

Swiss cheese was a practical and easily understandable expression of systems theory, which broadened attention from errors and supposed failings of individuals, to the design of the systems in which they operated.

Another area where the Swiss cheese model demonstrated its value was in health care, Reason’s primary area of research since 2000. Awareness of the model improved outcomes and lessened the tendency to punish health care workers for inadvertent mistakes made in the context of pressure, chaos and poor system design.

Robert Wachter, Chair of the Department of Medicine at the University of California San Francisco, told The New York Times that reading Reason was ‘an epiphany’, almost ‘like putting on a new pair of glasses.’

Reason followed up the Swiss cheese model with another pioneering idea – just culture, the concept of shared personal and organisational responsibility for safety and the open honest communication required to achieve this. It was a follow-up to one of his earlier insights. In his 1990 book, Human error, he wrote, ‘Rather than being the main instigators of an accident, operators tend to be the inheritors of system defects. Their part is that of adding the final garnish to a lethal brew whose ingredients have already been long in the cooking.’

Tributes

Safety and investigation expert Graham Edkins, who worked at Qantas, CASA and the Australian Transport Safety Bureau, says Reason’s contribution to safety investigation was on a global scale but his influence in Australia was particularly early and strong.

Many industries are safer because of James Reason’s work and lots of people travel and work safely without ever realising his influence.

‘Australia was the first jurisdiction in the world to use Reason’s model in an aviation accident investigation (in a 1991 investigation of a near collision at Sydney Airport),’ he said. ‘The then Bureau of Air Safety Investigation adopted it for the Monarch Airlines accident investigation of 1993.’

‘He had an enormous skill to communicate in a way that was non-academic, clear and visual,’ Edkins said.

Graham Braithwaite, Professor of Safety and Accident Investigation at Cranfield University, said Reason would jovially greet him as ‘young Braithwaite’. ‘He had a genius for storytelling, in particular, the way in which he turned complex thinking into what then felt like common sense,’ he said.

Sidney Dekker, author and founder of Griffith University’s Safety Science Innovation Lab, said Reason’s influence would endure, ‘not just in the ideas he shaped, but in the way he taught us to pay attention to the organisational blunt end, to speak and write vividly and to challenge leaders with both conviction and humour’.

Former US National Transportation Safety Board Chairman Robert Sumwalt compared Reason to world-changing scientific pioneers.

‘Some scholars play a critical role in founding a whole field of study: Sigmund Freud in psychology, Noam Chomsky in linguistics, Albert Einstein in modern physics,’ Sumwalt wrote in a 2018 blog post. ‘In the field of safety, Dr James Reason has played such a role.’

Erik Hollnagel, Professor Emeritus of Human-machine Interaction at Linköping University, Sweden, paid tribute to his friend with a verse from an old Norse poem, Hávamál.

Cattle die and kinsmen die, thyself too soon must die, but one thing never, I ween, will die, fair fame of one who has earned.

A life

James Tootle was born in Garston, Hertfordshire, near London, on 1 May 1938. In 1940 his father was killed in a German bombing raid during the World War II Blitz, and James was raised by his mother and grandfather, Thomas Augustus Reason, whose surname he adopted.

He initially studied medicine but changed to psychology after noticing a cheerful group of students in a hospital dining room. One of these, Rea Jaari, became his wife in 1964. His initial field of study was airsickness and spatial disorientation, but as he drily noted, it was difficult to find volunteers willing to become nauseated by his experiments.

In 1962, he graduated from the University of Manchester with a degree in psychology. He earnt a doctorate in 1967 from the University of Leicester and joined the University of Manchester in 1977.

He was made a Commander of the Most Excellent Order of the British Empire (CBE) in 2003.

‘Jim’ Reason is survived by Rea, daughters Paula and Helen and 3 grandchildren. He also leaves an intangible but powerful legacy that is a priceless gift to everyone who flies. In Sumwalt’s words, ‘If you’re reading this, it’s possible that you owe your life to his ideas.’

Reason for optimism: focus on human excellence

James Reason visited Australia in 2000 to speak at an Australian Aviation Psychology Association symposium. In Flight Safety Australia’s January 2001 edition, he shared his thinking about safety and an emerging topic – resilience – that would become the subject of his last major work, The Human Contribution. His words still make thought-provoking reading 25 years on.

Reason began by pointing out that safety research had traditionally concentrated on what went wrong. ‘There can be little doubt that the dominant tradition is the event-dependent one,’ he said.

He suggested there was equal value in studying things that went right. ‘If human fallibility is a mere truism, what is there that is really interesting about human performance?’ he asked. ‘The answer, I believe, lies on the reverse side of the coin.’

Reason argued that as operators of complex systems, people had an unmatched capacity to adapt and adjust to the surprises thrown up by a dynamic and uncertain world. This included the often remarkable ability to compensate for their own errors.

He cited the ‘Gimli Glider’ accident as an example of human improvisation that no automated system could match. In that fatality-free event, a Boeing 767 flying over Canada ran out of fuel. The first officer remembered a nearby military runway from his previous air force career and the captain skilfully glided there and landed. In an unprecedented manoeuvre for a heavy aircraft, he sideslipped the unpowered airliner on final to lose height.

After summarising the then-limited research into exceptional performance, Reason concluded:

There would appear to be at least two vital components underpinning both high-reliability organisations and individual excellence:

- a mindset that expects unpleasant surprises

- the flexibility to deploy different modes of adaptation in different circumstances.

He quoted a study of surgery that found all surgeons made errors but high-performing ones compensated well because they had anticipated their own fallibility and mentally rehearsed how to recover.

Systems that strove for safety by engineering out the human element because of its so-called unreliability were making a mistake, he argued.

‘Human variability in the form of moment-to-moment adaptations and adjustments to changing events is also what preserves system safety in an uncertain and dynamic world,’ he said.
