‘As soon as we think we are safe, something unexpected happens.’ – Buddha

A vanishingly improbable avionics flaw happened and caused a flight control crisis. Old-fashioned flight crew skill and experience solved it.

- Airbus A330-300 VH-QPA

- Indian Ocean near Learmonth, Western Australia

- 12:40:26 Australian Western Standard Time, Tuesday 7 October 2008

Without warning, the warnings started.

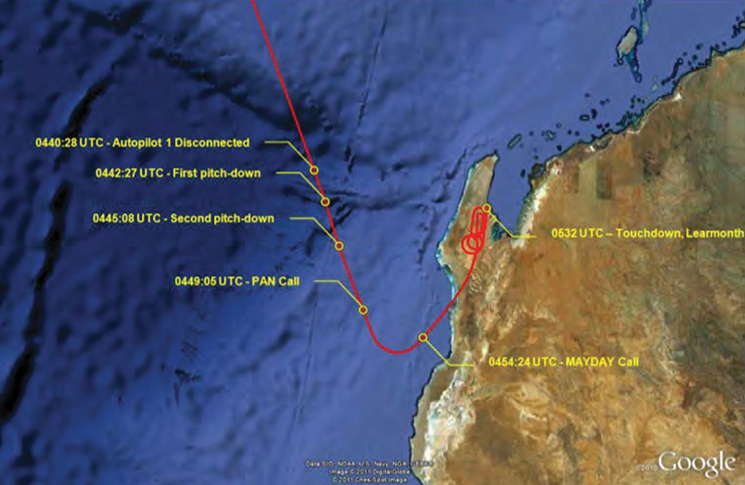

Alarms in the Qantas Airbus A330-300’s cockpit signalled the jet was stalling and had exceeded its maximum speed limit. The autopilot disconnected. Altitude and speed indicators jumped around as the captain’s navigation display went blank.

The 2008 Singapore-Perth flight was at flight level 370, 154 kilometres west of Learmonth, a remote airport in north-west Western Australia, when erroneous data from a key component resulted in the roller-coaster ride that left at least 110 passengers and 9 crew members injured.

A savage pitch down had flung almost all the unrestrained cabin occupants to the ceiling.

Then, just as dazed passengers and crew tried to fathom what had just hit them, it happened again.

The higher injury rate and more severe trauma suffered by dozens of passengers were the price paid for not keeping their seatbelts fastened. Eleven passengers and a crew member were seriously injured while another 39 people required hospital treatment.

The accident generated international headlines, spawned a complex and lengthy Australian Transport Safety Bureau (ATSB) investigation and raised questions about automation in aircraft. It was also one of several incidents that led to changes in training, software and hardware aimed at mitigating the impact of unexpected events.

Although passengers and crew were subjected to the kind of gravitational forces normally associated with severe turbulence, the 7 October 2008 flight had not run into dangerous atmospheric conditions.

Nor was it a case of pilot error. Captain Kevin ‘Sully’ Sullivan was a former US Navy fighter pilot who had landed F-14 Tomcats on the pitching decks of aircraft carriers and attended the famous ‘Top Gun’ Navy Fighter Weapons School.

Sullivan was the first US Navy exchange pilot to be posted to the RAAF and served in the role of combat instructor for the Mirage 3. With 13,592 flying hours under his belt, including 7,505 in command of various jets and 2,453 on an A330, he was trained to handle unexpected events and had done so in the past. But even he was unprepared for the way the A330’s sophisticated automated flight control system suddenly locked out its pilots and put the aircraft into a dive.

Fight or flight

He would later describe it as a ‘psychotic takeover’ that shocked the flight crew and saw an initial two-second plunge of 150 feet produce a peak vertical acceleration of minus 0.8g – higher in the rear of the cabin.

‘You’re all hyped up with adrenalin plus all the other chemicals that your body is producing,’ Sullivan says of the experience. ‘It’s a primal body response but it can cause a lot of problems. And I had to manage that in myself and my co-pilot after the first and second pitch downs.’

ATSB investigators found the aircraft’s flight control primary computers, called PRIMs by pilots, commanded the nose-down pitch because of a software design limitation and corrupted data supplied by another system.

The faulty data came from one of 3 air data inertial reference units (ADIRUs) that feed critical information about the state of the aircraft and the outside environment to the 3 PRIMs.

Each ADIRU has 2 parts: an air data reference component and an inertial reference component.

Sensors supply the air data component with raw data used to calculate parameters such as airspeed, pressure, altitude and angle of attack, while the inertial reference unit measures parameters such as acceleration and positional information.

Investigation AO-2008-070. Published 7 October, 2008.

The first sign something was awry was when ADIRU-1 sent erroneous data spikes to the flight control primary computers and prompted one of the A330’s 2 autopilots to disconnect.

Sullivan initially switched to the second autopilot, but the faulty ADIRU continued to feed incorrect data to the flight computer, prompting the false stall and overspeed warnings, as well as several electronic centralised aircraft monitor (ECAM) alerts.

Uneasy about the continuing warnings and alarms, he disengaged autopilot 2 and reverted to manual control while calling for first officer Peter Lipsett to return to the flight deck.

Hand flying at 37,000 feet is a delicate operation, Sullivan says. ‘Just holding straight and level is like balancing on the head of a pin,’ he says. ‘Even though it’s easily controlled, you basically fly with 2 fingers and it’s very twitchy.’

Investigators would later discover that ADIRU-1 was generating ‘very high, random and incorrect values’ for the aircraft’s angle of attack (AoA), a critically important flight parameter.

Every pilot knows the risk of an aerodynamic stall if you let the AoA, the angle at which the oncoming airflow meets the wing, get too high.

The A330’s automated protections are designed to keep the aircraft flying within an envelope that prevents a stall but a key to this is the information provided by the ADIRUs. Having 3 ADIRUs provides redundancy and fault tolerance, allowing the PRIMs to compare information from all 3 to check consistency.
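The idea of comparing 3 redundant sources can be sketched in a few lines of Python. This is only an illustration of median voting in general, not the logic Airbus uses in the PRIMs; the function name, the tolerance and the sample readings are all assumptions made for the example.

```python
from statistics import median


def check_consistency(values, tolerance):
    """Vote across three redundant readings.

    Returns the median reading and the indices of any units whose
    reading differs from the median by more than `tolerance`.
    Hypothetical helper for illustration only.
    """
    mid = median(values)
    outliers = [i for i, v in enumerate(values) if abs(v - mid) > tolerance]
    return mid, outliers


# Three made-up angle-of-attack readings in degrees; unit 0 is spiking.
voted, outliers = check_consistency([50.6, 2.1, 2.3], tolerance=1.0)
print(voted, outliers)
```

In a scheme like this, a single unit reporting a wild value is outvoted by the other two and can be flagged for attention.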

But in this case, a specific pattern of incorrect values provided by ADIRU-1 indicated the AoA was too high, prompting the flight control computers to pitch down the aircraft’s nose by 8.5 degrees.

Worse still, Sullivan’s attempts to counter the move with aggressive inputs on the sidestick, which pilots use to control Airbus aircraft, had no effect for almost 2 crucial seconds in the first pitch down and longer in the second.

After his initial full backstick input was blocked by the computers, he released the sidestick and used small nose-up manual inputs until manual control was re-established, and he could return the A330 to 37,000 feet.

As the flight crew responded to an ongoing cascade of warnings and tried to reset the PRIM, the computers again pushed down the nose. This time it was by a less severe but still frightening 3.5 degrees.

The crew were unsure about which systems on the aircraft were working but Sullivan identified the PRIM as a likely culprit and decided not to engage it again.

He ordered a mayday and employed a high-energy, high-rate-of-descent approach learnt during his naval career to safely land the crippled aircraft at Learmonth.

ATSB investigators praised the flight crew’s response to the cacophony of alarms and the nosedives as demonstrating professionalism and sound judgement.

The detailed investigation found incorrect ADIRU data spikes were due to intermittent problems in the unit’s central processing unit (CPU) that saw data incorrectly packaged and labelled.

Spikes indicating a high angle of attack were sent to the PRIMs as valid data because of a limitation on the unit’s built-in test equipment.

A secondary defence, an algorithm in the PRIMs designed to detect and manage incorrect information from an ADIRU, was tripped up by the pattern of spikes.

Although generally effective, the algorithm couldn’t manage a scenario where data spikes were 1.2 seconds apart. The QF72 incident was the only known example where this design limitation led to a pitch-down command in over 28 million flight hours on A330/A340 aircraft.
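One way a timing-dependent limitation of this kind can arise is shown in the toy model below. It assumes a filter that reacts to a spike by holding the last good value for a fixed period and then accepts whatever the input is when the hold ends. That behaviour, the function names, the 0.1-second sample interval and the threshold are all assumptions made for illustration; the sketch is not the PRIM algorithm.

```python
def filter_with_hold(samples, dt, threshold, hold):
    """Toy spike filter: on a sudden jump, hold the last good value
    for `hold` seconds, then accept the input without rechecking it."""
    hold_steps = round(hold / dt)
    steps_left = 0
    last_good = samples[0]
    out = []
    for x in samples:
        if steps_left > 0:
            steps_left -= 1
            if steps_left == 0:
                # Assumed weak point: the value present at the end of
                # the hold period is accepted unchecked.
                last_good = x
            out.append(last_good)
        elif abs(x - last_good) > threshold:
            # Spike detected: start holding the last good value.
            steps_left = hold_steps
            out.append(last_good)
        else:
            last_good = x
            out.append(x)
    return out


def make_samples(spike_indices, n=30, normal=2.0, spike=50.0):
    """Build a steady signal with spikes at the given sample indices."""
    return [spike if i in spike_indices else normal for i in range(n)]


# One spike is filtered out; a second spike exactly 1.2 s later gets through.
single = filter_with_hold(make_samples({5}), dt=0.1, threshold=5.0, hold=1.2)
double = filter_with_hold(make_samples({5, 17}), dt=0.1, threshold=5.0, hold=1.2)
print(max(single))  # 2.0
print(max(double))  # 50.0
```

In this model a second spike that lands anywhere else inside the hold period is ignored. Only one that coincides with the end of the hold reaches the output, which is why such a flaw could stay hidden for a very long time.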

The erroneous angle of attack data activated the aircraft’s defence against a high AoA, as well as a mechanism designed to stop the aircraft from pitching up.

The 2 combined to produce a 10-degree nose-down elevator command that was close to the highest pitch-down command available, according to the ATSB.

By the time the bureau’s final report was released, Airbus had already released a new crew procedure and redesigned the algorithm to prevent the same type of accident from happening again.

The lessons learnt were also applied to the company’s aircraft fleet beyond the A330 and incorporated in the design principles of its flight computers.

The question of what triggered the Northrop Grumman LTN-101 ADIRUs to mislabel the data proved more complex.

Other times

The ATSB found 3 examples where this type of ADIRU had malfunctioned, twice on the same aircraft and once on another Qantas aeroplane.

All 3 occurred off the coast of WA, prompting media speculation about the role of the Harold E Holt Naval Communication Station near Exmouth. That theory was later dismissed.

Investigators said the data spikes that affected QF72 were probably generated when the ADIRU’s central processing unit combined the data value from one parameter with the label for another. It had confused altitude data with AoA data and transmitted the result to other systems without flagging it as invalid, activating the aircraft’s automatic protection against a stall.
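The effect of pairing one parameter’s value with another’s label can be illustrated with a small sketch. The word layout, the label names and the scale factors below are hypothetical and chosen only to make the arithmetic easy to follow; they are not the encoding used by the ADIRU.

```python
from dataclasses import dataclass

# Hypothetical scale factors: engineering units per raw count.
SCALE = {"altitude_ft": 1.0, "aoa_deg": 0.0078125}


@dataclass(frozen=True)
class DataWord:
    label: str   # tells the receiver which parameter this is
    raw: int     # encoded value
    valid: bool  # status flag set by the sender


def decode(word):
    """Interpret the raw value according to the label it arrived with."""
    return word.raw * SCALE[word.label]


altitude = DataWord("altitude_ft", 37000, True)

# Fault: the altitude value goes out under the angle-of-attack label,
# still marked as valid.
corrupted = DataWord("aoa_deg", altitude.raw, True)

print(decode(altitude))   # 37000.0
print(decode(corrupted))  # 289.0625
```

The receiver has no way of knowing the value belongs to a different parameter. It trusts the label and the valid flag, so a perfectly reasonable altitude is read as an impossible angle of attack.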

What caused the data spikes was never pinned down but there was speculation it was a ‘single-event effect’ resulting from high-energy particles striking a CPU’s integrated circuit.

For Sullivan, losing control of the aircraft resulted in ongoing stress. He stopped flying in 2016 after a long battle with post-traumatic stress disorder.

He chronicled his experiences in a 2019 book, No Man’s Land – The Untold Story of Automation on QF72, which warned of the pitfalls of relying too much on automation and relinquishing control to computers.

He says much of what happened was not discussed in the manufacturer’s manuals and he has pushed for more realistic pilot training modules ‘attuned to events that have provided data’ such as QF72.

‘I’m concerned about pilot training and what airlines do and don’t do for their pilots,’ he told Flight Safety Australia. ‘Certainly, in the case of QF72, I didn’t learn anything from my airline training that assisted me on that date. It was all from my military training and experience.

‘It was an extreme event. There was a lot of startle and there was a lot of confusion, there was a lot of overload and there was a cascading sequence of failures we had to deal with. Plus, there was loss of control.’

Sullivan says dedicated loss-of-control training in the US Navy meant it was instinctive for him to release the pressure on the sidestick, something he believes would not come naturally to most pilots.

Subsequent events involving both Boeing and Airbus aircraft, including Air France Flight 447 and the 2 Boeing 737 MAX crashes, have further fuelled his worries about automated flight technology.

‘The automation is great when it works but if it doesn’t work, what do you do?’ he asks.

Qantas has changed to ADIRUs manufactured by Honeywell and installed new Airbus software aimed at preventing a single rogue AoA value being accepted by the flight control system, as well as a new system called Backup Speed Scale.

It says it also added new flight crew checklists and procedures. Airbus issued an Operations Engineering Bulletin explaining the background changes and revised procedures to crew.

Upset and recovery

Incidents such as QF72 and AF447 also produced an industry-wide change in Australia: the introduction of extended envelope training, more commonly known as upset prevention and recovery training (UPRT).

The Australian UPRT program is aligned with the focus of the US Federal Aviation Administration and covers aircraft with 30 seats and above and a maximum take-off weight greater than 8,618 kilograms. There is also a provision to mandate this training for other operations where necessary.

This meant that by 31 March 2022, all operators of large aircraft needed to have UPRT in their flight crew conversion and recurrent training programs.

Human factors topics covered include situation awareness, startle and surprise response and threat and error management.

CASA amended flight simulator requirements and flight training requirements to ensure adequate fidelity and instructor feedback tools outside the normal flight envelope.

Qantas says UPRT is embedded in its training system and that it includes modules covering system failure and mode degradation scenarios as well as more extreme unusual attitudes and a full stall.

For its part, Airbus argues automation has clearly played a key role in enhancing safety and reducing accidents and says it is constantly looking at ways to improve and advance its range of products to deliver higher levels of safety, efficiency and performance.

It says it is also committed to ensuring control ultimately rests with the flight crew. ‘We are committed to pioneering new solutions that will continue to support flight crews and deliver increased levels of safety. As we do so, we are in constant dialogue with our customers and the authorities,’ Airbus told Flight Safety Australia.

Veteran pilot and former CASA flight simulation team leader John Frearson recently published a book, Operational Safety for Aviation Managers: Practice Beyond the Theory, which uses historical examples to look at time-critical reactions by pilots.

Frearson says it’s unlikely a scenario such as QF72 would be a centrepiece of pilot training because it was a rare ‘black swan’ event and not an incident the crew could have avoided by thinking ahead.

But he agrees there’s value in including black swan events in training. ‘I’m a great believer in scenario-based training,’ he says.

Another question raised by the lead investigator on the complex probe into the QF72 event, Mike Walker, relates to the systems engineers responsible for identifying and guarding against system failure modes.

While Walker sees automatic flight protections as a benefit that usually works well, he says the onus is on manufacturers and developers to identify failure scenarios.

‘We were interested in how do the systems engineers design one of these systems,’ he says. ‘How did they conceive of all these different failure modes or build a system that could handle failure modes whether they could be conceived or not?’

Walker says no one individual can imagine the complexity involved in these aircraft systems and investigators raised questions about who was monitoring the systems engineers and what human factors tools they could use to find flaws.

He believes this has become more important as aircraft have increased in complexity.

‘Increasing use of technology and computer systems has undoubtedly made aviation safer,’ he says. ‘However, one thing we learnt from this is that increasing the complexity of your design can increase the risk.’